MLE2 : Operationalizing Models 2 ~ Build and Deploy Machine Learning Model As API Endpoints

The aim here is to build a Flask app (or a Flask microservice in the cloud) that loads a serialized model from disk and uses it to serve predictions. In other words, I want to demonstrate creating an API that can be queried to obtain predictions from a machine learning model. I am using the wine quality dataset from the UCI Machine Learning Repository, which poses a prediction problem: estimating the quality of a wine given some information about it. My workflow is made up of the following steps:

Train a machine learning model using Random Forest on my local machine and serialize it.

Put the inference logic (the model's .predict() call) in a Flask application and serve it as an API.

Test the API locally using an API client such as ARC (Advanced REST Client) or Postman.

Flask is a Python web framework that lets us build REST API web services with minimal configuration.

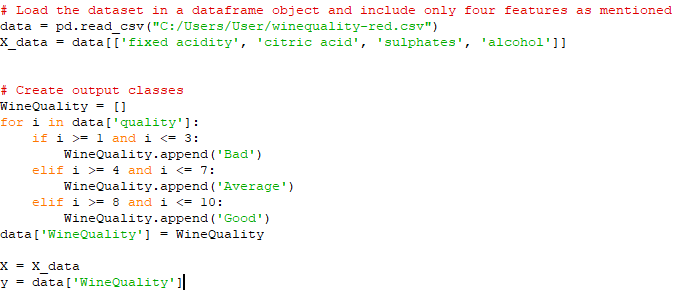

I first load the dataset into a DataFrame object and keep only the key features, namely fixed_acidity, citric_acid, sulphates and alcohol. The class label is wine_quality, which is encoded as Good, Bad or Average.

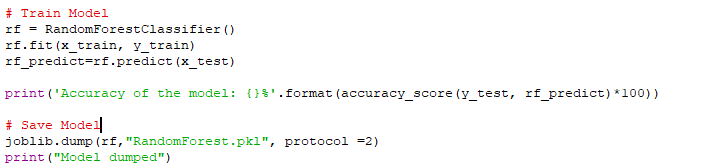

Once pre-processing is done, I train a Random Forest classifier on the data. After building the model, I save it, i.e. serialize it using the joblib utility that is commonly used with sklearn models. The idea behind serializing is to persist the model to disk so that the application can load it the next time it starts, rather than retraining it.
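To make the train-then-serialize step concrete, here is a minimal sketch. It is not the exact code behind the screenshots: the handful of rows is synthetic and only there so the snippet runs on its own, and the file name `wine_quality_rf.joblib`, the temporary directory and the hyperparameters are my assumptions.

```python
import os
import tempfile

import joblib
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

FEATURES = ["fixed_acidity", "citric_acid", "sulphates", "alcohol"]

# Synthetic rows for illustration only; the real data comes from the UCI file.
df = pd.DataFrame(
    [
        [7.4, 0.00, 0.56, 9.4, "Bad"],
        [7.8, 0.04, 0.65, 9.8, "Average"],
        [11.2, 0.56, 0.58, 9.8, "Good"],
        [6.7, 0.32, 0.82, 12.1, "Good"],
        [8.1, 0.10, 0.50, 9.2, "Bad"],
        [7.0, 0.25, 0.60, 10.5, "Average"],
    ],
    columns=FEATURES + ["wine_quality"],
)

# Assumed hyperparameters; random_state only makes the run repeatable.
model = RandomForestClassifier(n_estimators=50, random_state=42)
model.fit(df[FEATURES], df["wine_quality"])

# Serialize the fitted model to disk (hypothetical file name).
model_path = os.path.join(tempfile.mkdtemp(), "wine_quality_rf.joblib")
joblib.dump(model, model_path)

# Load it back, as the Flask app would do once at startup.
restored = joblib.load(model_path)
print(restored.predict(df[FEATURES].head(2)))
```

The restored object behaves like the original classifier, so the web app never needs access to the training data.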

Now that I have my trained model, I am ready to query it to get a class label for a test observation. This brings me to my next step, which is creating a Flask app. This translates to instantiating a Flask object called app and defining a predict() function on it. The function is exposed as an API endpoint that takes the input variables, transforms them into the format the model expects, and returns the prediction. It accepts POST requests.
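A rough sketch of such an app could look like the following. Several things here are my assumptions rather than details from the original app: the `/predict` route, the JSON field names (I reuse the feature names), the response shape and the error handling. To keep the snippet self-contained, a tiny forest fitted on synthetic rows stands in for the model that would normally be loaded from disk with `joblib.load`.

```python
import numpy as np
from flask import Flask, jsonify, request
from sklearn.ensemble import RandomForestClassifier

FEATURES = ["fixed_acidity", "citric_acid", "sulphates", "alcohol"]

# Stand-in for joblib.load(<path to serialized model>): a tiny forest fitted
# on synthetic rows so that this sketch runs on its own.
X_demo = np.array([
    [7.4, 0.00, 0.56, 9.4],
    [7.8, 0.04, 0.65, 9.8],
    [11.2, 0.56, 0.58, 9.8],
    [6.7, 0.32, 0.82, 12.1],
])
y_demo = ["Bad", "Average", "Good", "Good"]
model = RandomForestClassifier(n_estimators=20, random_state=0).fit(X_demo, y_demo)

app = Flask(__name__)


@app.route("/predict", methods=["POST"])  # assumed route name
def predict():
    payload = request.get_json(silent=True)
    if not isinstance(payload, dict):
        return jsonify({"error": "expected a JSON object"}), 400
    missing = [name for name in FEATURES if name not in payload]
    if missing:
        return jsonify({"error": f"missing fields: {missing}"}), 400
    try:
        # Arrange the inputs in the column order the model was trained on.
        row = [[float(payload[name]) for name in FEATURES]]
    except (TypeError, ValueError):
        return jsonify({"error": "all features must be numeric"}), 400
    label = model.predict(row)[0]
    return jsonify({"wine_quality": str(label)})


# Exercise the endpoint in-process with Flask's test client (no server needed).
with app.test_client() as client:
    response = client.post(
        "/predict",
        json={"fixed_acidity": 7.4, "citric_acid": 0.0,
              "sulphates": 0.56, "alcohol": 9.4},
    )
    status = response.status_code
    result = response.get_json()
    print(status, result)
```

For local testing with ARC or Postman, the app would instead be started with `app.run()` or `flask run`, and the same JSON body sent as a POST request to the endpoint.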